Using Mass Data to Open a New Era of Energy Exploration for Sinopec Geophysical Research Institute

Sinopec Geophysical Research Institute (SGRI), a subsidiary of the China Petroleum & Chemical Corporation (Sinopec), acts as an advisory body for the development of geophysical prospecting technology in China. It also serves as a Research and Development (R&D) center for advanced and core geophysics technology and for geophysical software, promoting the adoption of both and providing technical support to important geophysical prospecting projects.

In total, SGRI has taken part in more than 1,100 such projects and proposed hundreds of locations for exploratory and development wells, work that has earned it many national awards. With scientific data analytics underpinning its work, the institute has more than 2,000 Central Processing Unit (CPU) nodes and over 400 Graphics Processing Unit (GPU) nodes, representing a combined computing performance of 15 peta floating-point operations per second (PFLOPS), along with 28 PB of storage capacity.

Seismic wave surveying is the most commonly used method for oil and gas exploration. The process generates a massive amount of data, typically 1–2 TB of 2D data and hundreds of TB, or even PB, of 3D data. With global oil reserves diminishing, oil and gas exploration is becoming more difficult: determining the precise location of oil and natural gas layers takes longer and requires repeated attempts. When a layer is finally located, data volumes surge and place heavy pressure on data analytics workloads. In short, traditional data processing systems are starting to show the strain.

Information silos are an issue for traditional application systems: basic functions such as user rights and logs are configured separately, system by system, making it difficult for systems to communicate with each other.

Low resource utilization is inefficient and wasteful. Devices purchased in different phases have varied configurations, which makes unified scheduling hard. The knock-on effect is a low CPU utilization rate that is difficult to improve.

Inconsistent software and hardware configurations and standards are another consequence of purchasing in different phases. The resulting mismatches in technical architectures and standards get in the way of truly efficient Operations and Maintenance (O&M).

Finally, an inconveniently large number of application systems is a real headache for users, making flexible function customization impossible.

Built on a decoupled storage-compute architecture, Huawei OceanStor mass data storage provides a converged seismic data processing solution, in which a shared storage layer enables fast data mobility and highly efficient data processing.

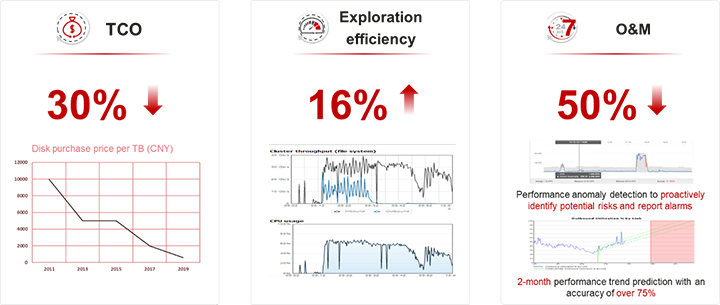

This shared storage layer now consolidates and shares storage resources for SGRI, reducing procurement costs. In addition, the quantity of cabinets required has fallen by 40%, in turn slashing power consumption and cooling costs. Overall, Total Cost of Ownership (TCO) has been cut by 30%.

The high-bandwidth, low-latency storage layer greatly increases the throughput of the entire High-Performance Computing (HPC) cluster, reducing Input/Output (I/O) wait times and improving the CPU parallel processing efficiency of the computing cluster. For SGRI, the CPU utilization rate is now stable at over 60%, and the data processing efficiency of seismic exploration has improved by more than 16%.
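The link between I/O wait time and CPU utilization can be illustrated with a minimal sketch. It assumes a simplified model in which a node's wall-clock time is split between computing and waiting on storage; the function name `cpu_utilization` and the timings are hypothetical, chosen only for illustration, and are not SGRI measurements.

```python
def cpu_utilization(compute_seconds: float, io_wait_seconds: float) -> float:
    """Fraction of wall-clock time spent computing rather than waiting on I/O.

    Simplified model (an assumption): no overlap between compute and I/O.
    """
    total = compute_seconds + io_wait_seconds
    if total <= 0:
        raise ValueError("total time must be positive")
    return compute_seconds / total


# Hypothetical timings for one batch of seismic traces (illustrative only).
slow_storage = cpu_utilization(compute_seconds=60.0, io_wait_seconds=90.0)
fast_storage = cpu_utilization(compute_seconds=60.0, io_wait_seconds=30.0)

print(f"slow storage: {slow_storage:.0%}, fast storage: {fast_storage:.0%}")
```

Under this model the compute work is unchanged; only the time spent waiting on storage shrinks, which is why a faster storage layer can raise utilization without adding CPU nodes.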

OceanStor mass data storage also brings strong self-maintenance capabilities. Based on fault and performance trend predictions, the storage system proactively identifies risks so that they can be rectified or prevented in advance. This ensures 24/7 service availability and reduces O&M workloads by more than 50%.