
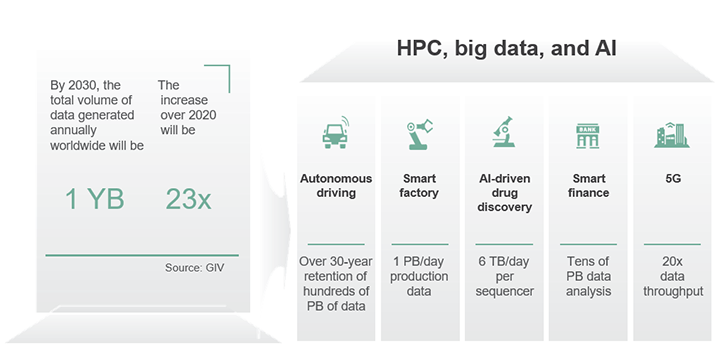

In today's digital age, where mass data reigns supreme, emerging technologies such as high-performance computing (HPC), big data analytics, and artificial intelligence (AI) are driving new applications.

The following examples show the importance of mass data in modern industry. In autonomous driving R&D, hundreds of petabytes (PB) of AI training data need to be stored for decades; a smart factory generates 1 PB of production data in a single day; and in AI-driven drug discovery, a single sequencer can generate 6 TB of data per day. Given such requirements, the HPC industry is shifting from compute-intensive to data-intensive workloads, and high-performance data analytics (HPDA) has become a significant development trend.

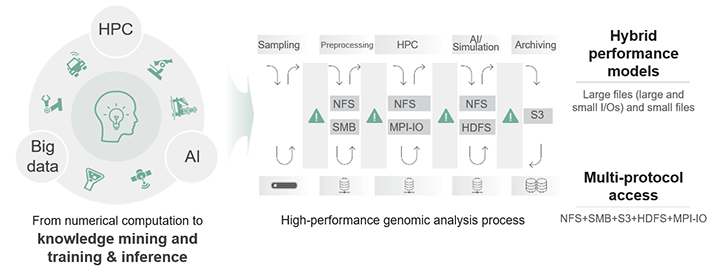

Let's look at a more specific example. Genomic analysis involves varied performance and access requirements across its phases, such as processing files of different sizes, meeting different response latencies, and hybrid protocol access (for example, NFS for standard file access, MPI-IO for parallel access, HDFS for big data analytics, and S3 for data archiving). Such service flows are characterized by hybrid workloads, so a single system that can host multiple workloads is desirable. This raises the question of how to analyze mass data efficiently enough to fully unleash its potential and value.

Huawei OceanStor Pacific scale-out storage is purpose-built for emerging applications, and its unique SmartBalance fully balanced system design is specially developed for hybrid workloads.

SmartBalance intelligently senses data types and I/O flows to improve the efficiency of hybrid workloads. It adopts three ground-breaking innovations:

• Multi-protocol capability balancing, which enables native, seamless interworking between file, object, and big data protocols.

• Dynamic resource scheduling balancing, which adapts data flows to large and small I/Os for optimal bandwidth, IOPS, and OPS performance.

• Large and small I/O path balancing, which optimizes latency and disk utilization.

The three innovations are described in detail below.

Traditionally, object storage systems use a flat indexing structure, which enables a single bucket to support hundreds of billions of objects. File systems, on the other hand, use a tree indexing structure, which facilitates file management. However, the number of files supported by a single directory is limited.

Unlike traditional multi-protocol interworking, which relies on gateways and plug-ins to convert between storage formats, OceanStor Pacific uses converged indexing for unstructured data to combine the advantages of file and object protocols. Its multi-protocol interworking technology adopts a tree-like structure in which metadata is stored in lexicographical order under an oversized directory. This directory contains multiple subdirectories that are invisible to external systems, and each subdirectory contains multiple single-layer index fragments. This structure retains the excellent scalability of a single bucket while ensuring high-performance, low-latency data access. In addition, the single-layer index fragments support multipart upload of an object and allow the file service to access the multiple parts of a file without any changes to upper-layer applications.

In short, OceanStor Pacific achieves multi-protocol interworking without any physical or logical gateways, and without losing semantics or compromising performance.
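The converged-index idea can be illustrated with a toy model: a single, lexicographically sorted key space split into single-layer fragments, which answers exact-key lookups like an object bucket and directory listings like a file system, with no gateway translating between the two views. This is a minimal Python sketch under our own assumptions, not OceanStor Pacific's implementation; the class `ConvergedIndex`, its methods, and the fragment capacity are hypothetical, and the hidden subdirectories are reduced to a flat list of fragments.

```python
import bisect


class ConvergedIndex:
    """Toy converged index (hypothetical): one flat, lexicographically sorted
    key space, split into single-layer fragments and served to both an
    object view and a file view."""

    def __init__(self, capacity=1024):
        self.capacity = capacity  # assumed maximum number of keys per fragment
        self.fragments = [[]]     # sorted key lists covering consecutive ranges
        self.meta = {}            # full key -> metadata

    def _locate(self, key):
        # Fragment i holds keys from its first key up to, but excluding,
        # the first key of fragment i + 1; fragment 0 also takes smaller keys.
        firsts = [frag[0] for frag in self.fragments[1:]]
        return bisect.bisect_right(firsts, key)

    def put(self, key, meta):
        if key not in self.meta:
            i = self._locate(key)
            frag = self.fragments[i]
            bisect.insort(frag, key)
            if len(frag) > self.capacity:
                # Split a full fragment in two instead of letting it grow
                mid = len(frag) // 2
                self.fragments[i:i + 1] = [frag[:mid], frag[mid:]]
        self.meta[key] = meta

    def get(self, key):
        """Object view: exact-key lookup, as in a flat bucket."""
        return self.meta.get(key)

    def scan(self, prefix):
        """Yield every key that starts with prefix, in lexicographical order."""
        i = self._locate(prefix)
        start = bisect.bisect_left(self.fragments[i], prefix)
        for frag in self.fragments[i:]:
            for key in frag[start:]:
                if not key.startswith(prefix):
                    return  # sorted order: no later key can match
                yield key
            start = 0

    def list_dir(self, path):
        """File view: direct children of a directory, derived from the same keys."""
        prefix = path if path == "" or path.endswith("/") else path + "/"
        children = []
        for key in self.scan(prefix):
            head, sep, _ = key[len(prefix):].partition("/")
            child = head + sep  # a trailing "/" marks a subdirectory
            # Keys under one subdirectory are contiguous, so duplicates are adjacent
            if not children or children[-1] != child:
                children.append(child)
        return children
```

For example, with `capacity=2`, inserting the keys `genome/run1/a.fq`, `genome/run1/b.fq`, `genome/run2/c.fq`, `genome/readme.txt`, and `logs/x.log` produces three fragments, yet `list_dir("genome")` still returns `['readme.txt', 'run1/', 'run2/']`. Because keys are kept in lexicographical order, everything under one directory prefix is contiguous, so a directory listing is a single ordered range scan, while splitting keeps each fragment small no matter how many keys the bucket holds.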

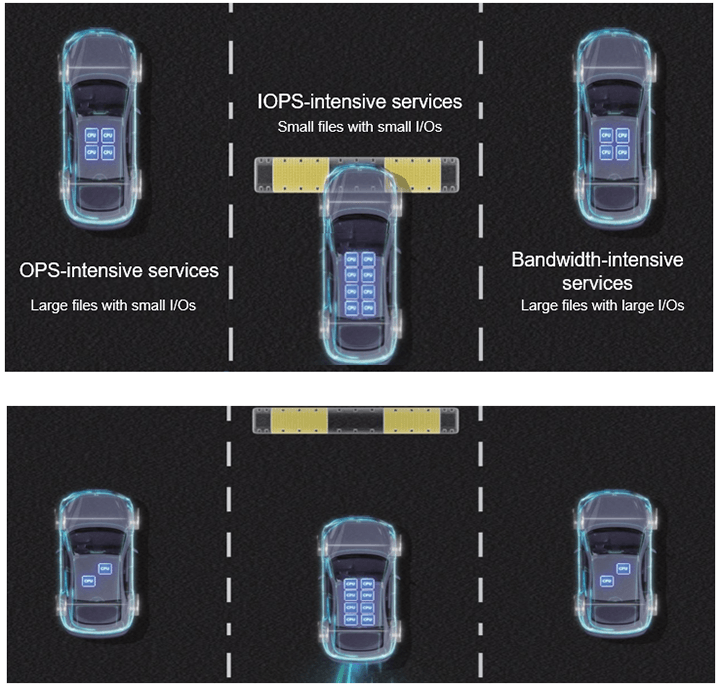

As previously described, hybrid workloads come with various I/O models, such as large files with large I/Os, large files with small I/Os, and small files with small I/Os. To adapt data flows to both large and small I/Os and achieve optimal bandwidth, IOPS, and OPS performance, the storage system needs dynamic resource scheduling balancing.
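Sensing which I/O model a request belongs to is a natural first step for this kind of scheduling. The sketch below classifies a request into one of the three models above and records the metric each model typically stresses; the thresholds and names are assumed values chosen for illustration, since the article does not state how OceanStor Pacific draws these boundaries.

```python
from enum import Enum

# Assumed thresholds for illustration only; the article gives no values.
LARGE_FILE_BYTES = 64 * 1024 * 1024  # files of at least 64 MiB count as large
LARGE_IO_BYTES = 1024 * 1024         # requests of at least 1 MiB count as large


class IOModel(Enum):
    # Value: the performance metric each model typically stresses
    LARGE_FILE_LARGE_IO = "bandwidth"
    LARGE_FILE_SMALL_IO = "IOPS"
    SMALL_FILE_SMALL_IO = "OPS"


def classify(file_size: int, io_size: int) -> IOModel:
    """Map one request to one of the three I/O models (hypothetical rule)."""
    if file_size < LARGE_FILE_BYTES:
        # Small files are treated as one class regardless of request size,
        # since per-file operations dominate their cost.
        return IOModel.SMALL_FILE_SMALL_IO
    if io_size >= LARGE_IO_BYTES:
        return IOModel.LARGE_FILE_LARGE_IO
    return IOModel.LARGE_FILE_SMALL_IO
```

For example, a 4 MiB read from a 10 GiB file is classified as `IOModel.LARGE_FILE_LARGE_IO`, while an 8 KiB read from the same file is `IOModel.LARGE_FILE_SMALL_IO`.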

First, let's look at dynamic allocation of CPU resources. This technique groups CPU cores and binds cores to I/O requests to ensure low processing latency for mission-critical applications. The number of cores in each group is adjusted dynamically to suit IOPS- and bandwidth-intensive workloads, keeping the CPU resource allocation optimal under hybrid workloads. Intelligent CPU scheduling, together with separate queues for large and small I/O requests, reduces CPU switchover latency. In addition, intelligent I/O scheduling enforces priorities among data reads, data writes, and advanced features, resulting in consistent latency for mission-critical applications.

Equipped with these capabilities, OceanStor Pacific delivers high IOPS for hybrid workloads while fully meeting the bandwidth service level agreement (SLA).
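The core-grouping and separate-queue ideas can be sketched as follows. Purely for illustration, this assumes that cores are split between a small-I/O group and a large-I/O group in proportion to each class's recent load, that each group serves only its own queue, and that reads outrank writes, which outrank advanced-feature (background) I/O. The function, class, priority order, and threshold are hypothetical, not taken from the product.

```python
import heapq

# Hypothetical priority classes: lower value is served first (assumed order).
PRIORITY = {"read": 0, "write": 1, "advanced": 2}
LARGE_IO_BYTES = 1024 * 1024  # assumed boundary between small and large I/Os


def split_cores(total_cores, small_load, large_load, min_per_group=1):
    """Split cores between the small-I/O and large-I/O groups in proportion
    to each class's recent load (e.g. CPU time used in the last interval)."""
    if total_cores < 2 * min_per_group:
        raise ValueError("not enough cores for two groups")
    load = small_load + large_load
    small = total_cores // 2 if load == 0 else round(total_cores * small_load / load)
    # Keep at least min_per_group cores in each group
    small = max(min_per_group, min(total_cores - min_per_group, small))
    return small, total_cores - small


class IOQueues:
    """Separate queues for small and large requests, so cores in one group
    never switch to the other class; each queue is ordered by priority,
    then by arrival order."""

    def __init__(self):
        self.queues = {"small": [], "large": []}
        self._seq = 0  # tie-breaker preserving FIFO order within a priority

    def submit(self, kind, size):
        name = "large" if size >= LARGE_IO_BYTES else "small"
        heapq.heappush(self.queues[name], (PRIORITY[kind], self._seq, kind, size))
        self._seq += 1
        return name

    def pop(self, name):
        """Called by a core in the group bound to queue `name`."""
        if not self.queues[name]:
            return None
        _, _, kind, size = heapq.heappop(self.queues[name])
        return kind, size
```

With 16 cores and a recent small-to-large load ratio of 3:1, `split_cores(16, 3, 1)` gives 12 cores to the small-I/O group and 4 to the large-I/O group; re-running it every interval is what makes the grouping dynamic. Within a queue, a read submitted after a background request is still served first.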

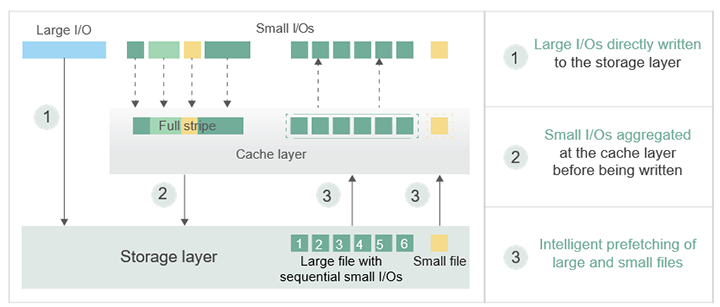

Lastly, let's take a look at path balancing, which improves the processing of large and small I/Os by making OceanStor Pacific's data flows adapt to each I/O size.

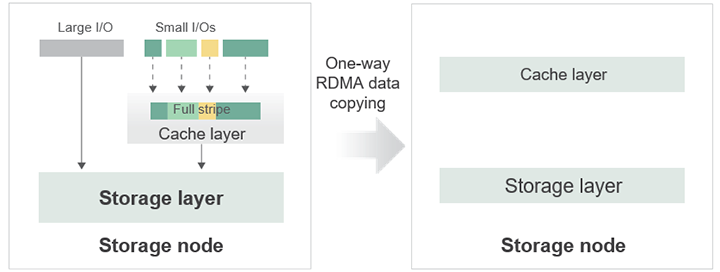

Large I/Os are passed straight through from clients to disks, reducing path overhead, while small I/Os are aggregated at the cache layer before being written to disks, greatly reducing the number of I/O interactions. With intelligent stripe aggregation, large numbers of random write I/Os are aggregated into 100% sequential write I/Os before being written to disks, reducing the strip overhead on disks.
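A toy model of the write path shows how pass-through and aggregation fit together. This sketch assumes an append-only (log-structured) layout and illustrative 16 KiB stripe and large-I/O thresholds: large writes go straight to disk, while small random writes are buffered until they fill a stripe and are then written as one sequential append, with a mapping table recording where each logical write landed. The class, sizes, and layout are hypothetical, not the product's design.

```python
# Assumed sizes for illustration; the article does not specify them.
STRIPE_BYTES = 16 * 1024    # a full stripe in this toy model
LARGE_IO_BYTES = 16 * 1024  # writes at least this big bypass the cache


class WritePath:
    """Toy write path on an append-only log (hypothetical): large writes
    pass through, small writes are aggregated into full stripes."""

    def __init__(self):
        self.disk_ops = []   # (physical_offset, length) of every disk write
        self.log_tail = 0    # next free physical offset
        self.cache = []      # buffered small writes: (logical_offset, data)
        self.cached_bytes = 0
        self.mapping = {}    # logical_offset -> (physical_offset, length)

    def _append(self, length):
        # All disk writes land at the log tail, so the disk sees only
        # sequential writes regardless of the logical offsets.
        phys = self.log_tail
        self.disk_ops.append((phys, length))
        self.log_tail += length
        return phys

    def write(self, logical_offset, data):
        if len(data) >= LARGE_IO_BYTES:
            # Large I/O: one direct disk write, no cache copy
            self.mapping[logical_offset] = (self._append(len(data)), len(data))
            return
        # Small I/O: buffer in cache until a full stripe is ready
        self.cache.append((logical_offset, data))
        self.cached_bytes += len(data)
        if self.cached_bytes >= STRIPE_BYTES:
            self.flush()

    def flush(self):
        """Write all buffered small I/Os as one sequential stripe write."""
        if not self.cache:
            return
        phys = self._append(self.cached_bytes)
        for logical_offset, data in self.cache:
            # Simplification: entries are keyed by start offset only;
            # partially overlapping writes are not handled.
            self.mapping[logical_offset] = (phys, len(data))
            phys += len(data)
        self.cache.clear()
        self.cached_bytes = 0
```

Four random 4 KiB writes followed by one 64 KiB write produce only two disk writes, both sequential: one 16 KiB stripe and one pass-through write. The sketch does not model protection of the buffered data before it is flushed.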

In contrast to traditional small I/O aggregation, OceanStor Pacific implements data mirroring based on one-way remote direct memory access (RDMA) to ensure the reliability of I/O aggregation. Only the primary storage node is involved in data copying between CPUs and memory. Compared with traditional cache mirroring, OceanStor Pacific further reduces the CPU overhead by 30%, improving IOPS while ensuring low latency.

For data reads, an intelligent-sensing prefetch design lets the storage system read large files through consecutive I/Os and prefetch small files into the high-speed cache, significantly boosting data access efficiency.
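The prefetch behavior can be approximated with a simple sequential-stream detector. This sketch assumes fixed 4 KiB blocks, treats files up to 64 KiB as small and loads them whole on first access, and reads ahead a few blocks once two consecutive blocks of a large file have been read; all of these values and names are assumptions, not the product's actual heuristics.

```python
from collections import OrderedDict

BLOCK = 4096                  # assumed block size
SMALL_FILE_BYTES = 64 * 1024  # assumed: files up to 64 KiB are loaded whole
SEQ_TRIGGER = 2               # consecutive reads needed to detect a stream
READAHEAD_BLOCKS = 4          # blocks prefetched once a stream is detected


class Prefetcher:
    """Toy read cache with sequential readahead (hypothetical)."""

    def __init__(self, file_sizes, cache_blocks=1024):
        self.file_sizes = file_sizes  # path -> size in bytes
        self.cache = OrderedDict()    # (path, block) -> True, in LRU order
        self.cache_blocks = cache_blocks
        self.last_block = {}          # path -> last block read
        self.run = {}                 # path -> length of current sequential run
        self.disk_reads = 0

    def _load(self, path, block):
        key = (path, block)
        if key in self.cache:
            self.cache.move_to_end(key)  # refresh LRU position
            return
        self.disk_reads += 1
        self.cache[key] = True
        if len(self.cache) > self.cache_blocks:
            self.cache.popitem(last=False)  # evict least recently used

    def read(self, path, block):
        """Read one block; return True on a cache hit."""
        hit = (path, block) in self.cache
        self._load(path, block)
        size = self.file_sizes[path]
        nblocks = -(-size // BLOCK)  # ceiling division
        if size <= SMALL_FILE_BYTES:
            # Small file: pull the whole file into cache
            for b in range(nblocks):
                self._load(path, b)
        else:
            # Large file: detect a sequential stream, then read ahead
            if self.last_block.get(path) == block - 1:
                self.run[path] = self.run.get(path, 1) + 1
            else:
                self.run[path] = 1
            self.last_block[path] = block
            if self.run[path] >= SEQ_TRIGGER:
                end = min(block + 1 + READAHEAD_BLOCKS, nblocks)
                for b in range(block + 1, end):
                    self._load(path, b)
        return hit
```

For example, reading blocks 0 to 3 of a 1 MiB file misses on the first two reads and hits on the next two, because the second consecutive read triggers readahead; any block of a 10,000-byte file hits once one of its blocks has been read.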

Huawei's advanced design, both in terms of architecture and technology, implements native multi-protocol interworking and adaptive data processing to deliver optimal bandwidth, IOPS, and OPS performance. Powered by SmartBalance, a fully balanced system design, OceanStor Pacific is ready to embrace diverse hybrid workloads, making it an ideal choice for customers to further extract the value of mass data in the yottabyte era.

Disclaimer: The views and opinions expressed in this article are those of the author and do not necessarily reflect the official policy, position, products, and technologies of Huawei Technologies Co., Ltd. If you need to learn more about the products and technologies of Huawei Technologies Co., Ltd., please visit our website at e.huawei.com or contact us.