
The Internet connects more than four billion users worldwide and supports emerging digital applications such as Virtual Reality (VR) and Augmented Reality (AR), 16K video, autonomous driving, Artificial Intelligence (AI), 5G, and Internet of Things (IoT). In addition, by merging online and offline services in the education, medical, and office sectors, it has an impact on every aspect of our lives.

A Data Center Network (DCN), as the infrastructure for the development of Internet services, has moved from GE and 10 GE networks to the 25 GE access + 100 GE interconnection phase.

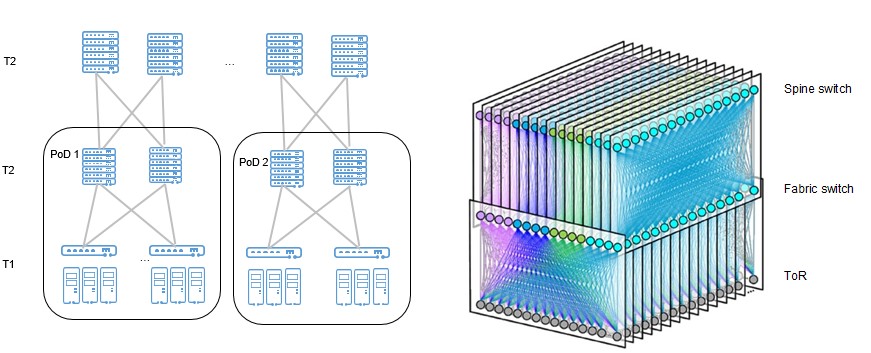

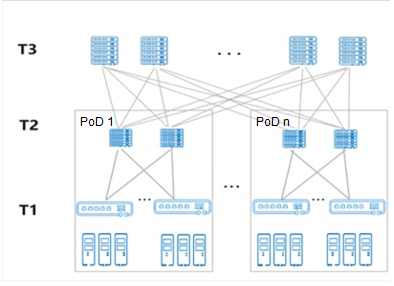

In 25 GE access + 100 GE interconnection architecture, a DCN implements large-scale access through three-layer networking. A single cluster can contain more than 100,000 servers.

As shown in the following figure, Point of Deployments (PoDs) at T1 and T2 layers can be flexibly expanded in much the same manner as blocks, and therefore constructed on demand.

With the improvement of large-capacity forwarding chips and the reduction of 100 GE optical interconnection costs, single-chip switches are used to construct a 100 GE interconnection network. This single-chip multi-plane interconnection solution typically features 12.8 Tbit/s chips. A single chip provides 128 x 100 GE port density, and a single PoD can connect to 2000 servers.

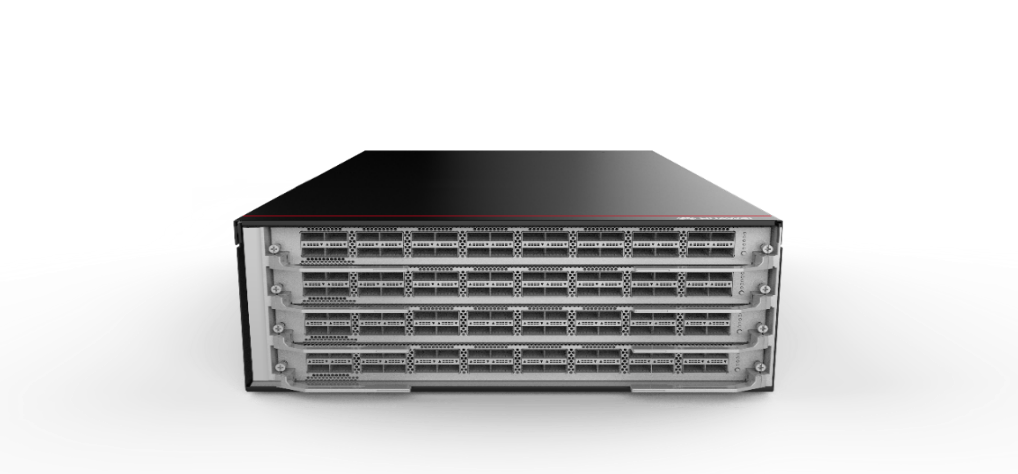

Figure 1-1: Typical 128-port 100 GE high-density fixed switch

Compared with a traditional solution composed of fixed and modular devices, a full-fixed-device networking solution increases the number of network nodes and optical interconnection modules between devices, which also increases the Operations and Maintenance (O&M) workload. However, a high-performance forwarding chip is introduced to effectively reduce the per-bit cost of DCN ports, which is obviously attractive to large Internet enterprises. On one hand, large Internet enterprises can quickly introduce 100 GE full-fixed-device architecture to reduce network construction costs; on the other, they can use their strong Research and Development (R&D) capabilities to improve automatic network deployment and maintenance capabilities in order to cope with the increased O&M workload.

As a result, large Internet enterprises often use the same 100 GE network solution, and full-fixed-device networking has become the basis for 100 GE network architecture evolution.

The 25 GE access + 100 GE interconnection solution promotes unified chip selection and rapid growth, demonstrating how technology dividends drive rapid evolution of Internet Data Center (IDC) network architecture. With the launch of single-chip network products, 100 GE inter-generational technology dividends are now available.

Given the inevitable bandwidth upgrade associated with such continuous and rapid service development, enterprises now face a choice: 200 GE or 400 GE?

Networks are never isolated, and the overall environment of the industry determines whether technologies can advance and become mature.

First, let's review the current status of the 200 GE and 400 GE industries from the perspective of network standards, servers, and optical modules.

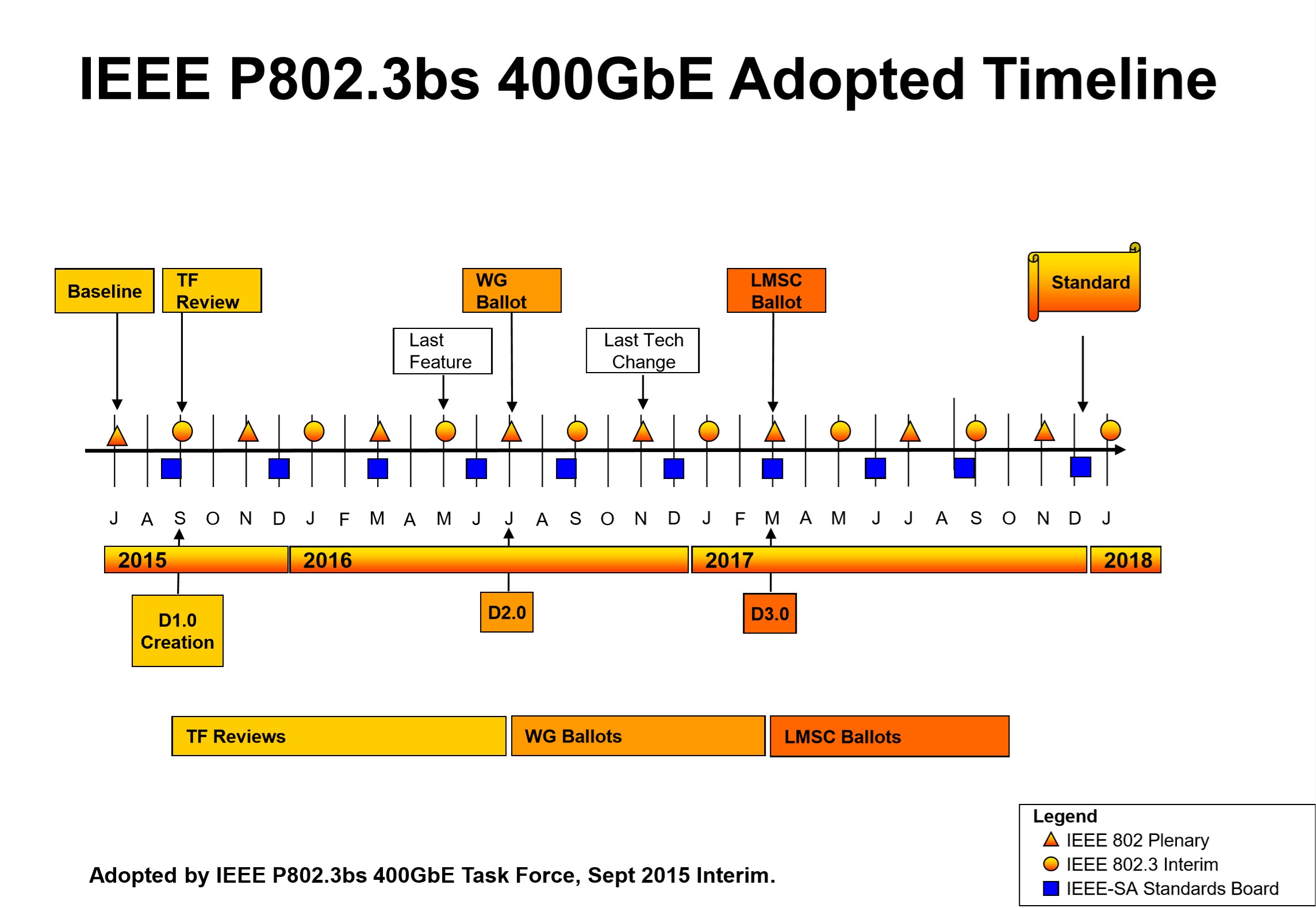

During the evolution of Institute of Electrical and Electronics Engineers (IEEE) standards, work on the 200 GE standard began after the 400 GE standard.

After completing the Bandwidth Assessment I (BWA I) project survey, the IEEE 802.3 Ethernet Working Group initiated a project to formulate the 400 GE standard in 2013. In 2015, the IEEE established the 802.3cd project and began to formulate the 200 GE standard in order to further expand the market scope and include 50 GE server and 200 GE switch specifications.

As 200 GE standards are derived from their 400 GE equivalents, 200 GE single-mode optical module specifications were finally included in the 802.3bs project. By then, the main designs of Physical Coding Sublayer (PCS), Physical Medium Attachment (PMA), and Physical Medium Dependent (PMD) had been completed for 400 GE. The 200 GE single-mode optical module specifications are generally formulated based on half of those for 400 GE.

On December 6, 2017, IEEE 802 approved the IEEE 802.3bs 400 GE Ethernet standard, including 400 GE Ethernet and 200 GE Ethernet single-mode optical module specifications, and the standard was officially released. IEEE 802.3cd defined the 200 GE Ethernet multimode optical module standard, which was officially released in December 2018.

Figure 1-2: IEEE 802.3bs 400 GE standard milestone

As described in the following table, 400 GE supports all-scenario standards, including 100 m, 500 m, 2 km, and long distance 80 km.

| Distance | Standard | Name | Electrical Port Rate | Optical Port Rate |
| --- | --- | --- | --- | --- |
| 100 m | IEEE 802.3cd | 200G SR4 | 4 x 56 GE | 4 x 50 GE |
| 100 m | IEEE 802.3cm | 400G SR8 | 8 x 56 GE | 8 x 50 GE |
| 100 m | IEEE 802.3cm | 400G SR4.2 | 8 x 56 GE | 8 x 50 GE |
| 500 m | IEEE 802.3bs | 400G DR4 | 8 x 56 GE | 4 x 100 GE |
| 2 km | IEEE 802.3bs | 200G FR4 | 4 x 56 GE | 4 x 50 GE |
| 2 km | IEEE 802.3bs | 400G FR8 | 8 x 56 GE | 8 x 50 GE |
| 2 km | 100G Lambda MSA | 400G FR4 | 8 x 56 GE | 4 x 100 GE |
| 10 km | IEEE 802.3bs | 400G LR8 | 8 x 56 GE | 8 x 50 GE |
| 6 km | 100G Lambda MSA | 400G LR4 | 8 x 56 GE | 4 x 100 GE |
| 80 km | OIF | 400G ZR | 8 x 56 GE | DP-16QAM |

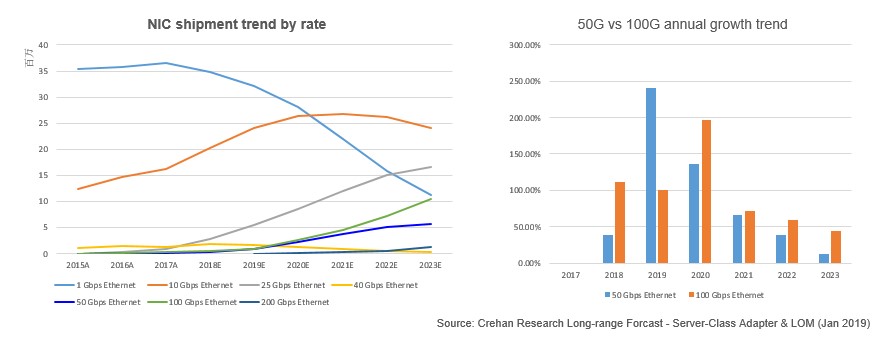

Figure 1-3: Forecast on the shipment trend of NICs and servers

According to Crehan's forecast, 50 GE and 100 GE Network Interface Cards (NICs) have been shipping since 2019. In 2018 and 2019, the industry was undecided about the next-generation upgrade for 25 GE NICs. Despite a reversal in shipment numbers in 2019, the 100 GE server market surpassed the 50 GE market by 2020, as the industry became fully confident in 100 GE servers.

Two mainstream Central Processing Unit (CPU) chip vendors, Intel and AMD, will launch Peripheral Component Interconnect Express (PCIe) 4.0 chips in Q3 2020, capable of 50 Gbit/s Input/Output (I/O). For high-end applications, I/O can reach speeds of 100 Gbit/s and 200 Gbit/s. Both vendors are expected to launch additional chips in the first half of 2021 featuring increased I/O speeds of 100 Gbit/s, rising to 400 Gbit/s[1] for high-end applications.

In the context of chip development and server delivery prediction, 100 GE servers will quickly become the mainstream.

As data center access servers evolve from 25 GE to 100 GE, should 200 GE or 400 GE be selected for the current 100 GE interconnection network?

| Item | 10 GE Access and 40 GE Interconnection | 25 GE Access and 100 GE Interconnection |
| --- | --- | --- |
| Bandwidth | A | 2.5A |
| Cost | C | C |
| Power Consumption | B | B |

As data center servers evolve from 10 GE to 25 GE, and network interconnection is upgraded from 40 GE to 100 GE, bandwidth increases 2.5-fold while interconnection costs and power consumption remain unchanged. The cost and power consumption per Gbit/s of interconnection therefore fall to 40% of their previous values. As a result, 100 GE is replacing 40 GE to become the mainstream network interconnection solution in the 25 GE era.
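The per-Gbit/s comparison can be sketched as a short calculation. This is a minimal illustration only: it takes the table's bandwidth ratio (A versus 2.5A, that is, 40 GE versus 100 GE interconnection) and normalises the placeholder cost C to 1.0. The helper name `per_gbps` is hypothetical.

```python
def per_gbps(total, bandwidth_gbps):
    """Normalise a total cost (or power) figure per Gbit/s of interconnection bandwidth."""
    return total / bandwidth_gbps

# Placeholder unit: the table lists cost C as unchanged between the two generations.
COST_C = 1.0

old_cost = per_gbps(COST_C, 40)    # 40 GE interconnection
new_cost = per_gbps(COST_C, 100)   # 100 GE interconnection

# Ratio of the new per-Gbit/s cost to the old one.
ratio = new_cost / old_cost
print(f"Cost per Gbit/s at 100 GE is {ratio:.0%} of the 40 GE figure")
```

The same function applies to power consumption by passing the placeholder B instead of C.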

200 GE and 400 GE optical modules differ from traditional optical modules. Traditional optical modules use Non-Return-to-Zero (NRZ) signal transmission technology, where high and low signal levels represent the digital logic signals 1 and 0, and one bit of logic information is transmitted in each clock cycle. Both 200 GE and 400 GE optical modules use Pulse Amplitude Modulation 4 (PAM4), a high-order modulation technology that uses four signal levels for transmission. Two bits of logical information (00, 01, 10, or 11) are transmitted in each clock cycle.

Consequently, at the same baud rate, the bit rate of a PAM4 signal is twice that of an NRZ signal, doubling transmission efficiency and reducing transmission costs. In terms of optical module composition, 200 GE and 400 GE modules both use the mainstream 4-lane architecture and have similar module design costs and power consumption.
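The difference between the two modulation schemes can be illustrated with a toy encoder. This is a sketch under simplifying assumptions: symbols are plain integers rather than optical power levels, and the bit-pair mapping is straight binary (00 to 0, 01 to 1, 10 to 2, 11 to 3), whereas real transceivers may use a different mapping such as Gray coding. The function names are hypothetical.

```python
def nrz_encode(bits):
    """NRZ: one bit per symbol, two signal levels (0 and 1)."""
    return list(bits)

def pam4_encode(bits):
    """PAM4: two bits per symbol, four signal levels (0 to 3)."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    # Assumed straight binary mapping of each bit pair to a level.
    return [2 * bits[i] + bits[i + 1] for i in range(0, len(bits), 2)]

bits = [0, 0, 0, 1, 1, 0, 1, 1]
nrz_symbols = nrz_encode(bits)
pam4_symbols = pam4_encode(bits)

# Same payload, half as many symbols: at an equal baud rate PAM4 carries twice the bits.
print(len(nrz_symbols), len(pam4_symbols))
```

For the same eight bits, NRZ needs eight symbols and PAM4 needs four, which is the doubling of bit rate at a fixed baud rate described above.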

| Optical Module | 200 GE | 400 GE |
| --- | --- | --- |
| Modulation Mode | PAM4 | PAM4 |
| Implementation | 4 x 50 GE | 4 x 100 GE |
| Design Cost | C | C |
| Power Consumption | B | B |

As the bandwidth of a 400 GE module is twice that of a 200 GE module while the module cost and power consumption are similar, the cost and power consumption per bit of a 400 GE module are half those of a 200 GE module.

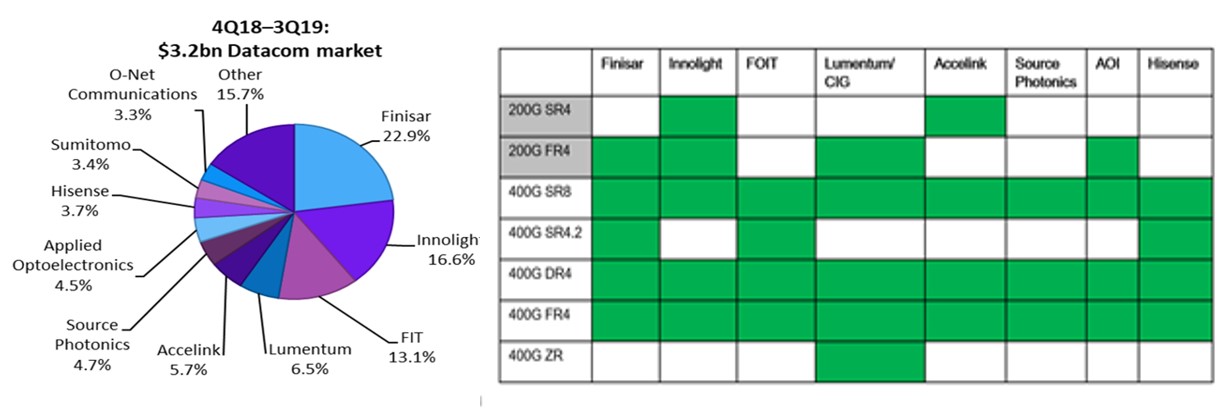

In addition to architecture design, module costs also depend on the scale of deployment. According to the delivery data of third-party consulting company Omdia (originally known as OVUM), the layout of 200 GE and 400 GE optical modules provided by the top eight suppliers is as follows.

As shown in the preceding figure, 200 GE modules come in two variants: 100 m SR4 and 2 km FR4. Of these, only the 100 m SR4 module is available in five types. For 400 GE, by contrast, the top eight vendors have deployed 100 m, 500 m, and 2 km modules. The 400 GE industry is far more mature, offering a wide array of choices for customers.

This analysis further proves that PAM4 technology increases technical costs and power consumption. In the DCN field, which is sensitive to both costs and power consumption, the industry requires urgent evolution from 200 GE to the more efficient and competitive 400 GE.

DCNs are designed around the delivery of services. With this in mind, fast-growing digital construction will drive the rapid growth of 100 GE servers and cement them as the mainstream option in 2020. In terms of costs, data center optical components account for more than half of the total cost of network devices. With the introduction of PAM4, the per-bit cost of a 400 GE optical module is lower than that of a 200 GE optical module. This reduction in optical module deployment cost directly lowers overall network construction costs.

In general, 400 GE is enjoying strong momentum, while 200 GE may become a temporary transition or even be skipped entirely.

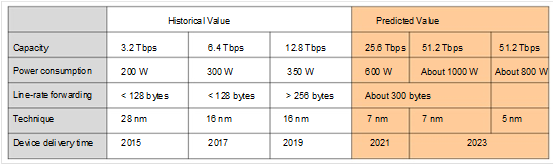

Switches are the access and interconnection devices for data center servers, so their capacity must grow as server I/O increases. The switching capacity of the core component, the forwarding chip, doubles every generation. However, doubling forwarding chip capacity is far more challenging than doubling NIC capacity, because a forwarding chip must provide high bandwidth for the many servers connected to it.

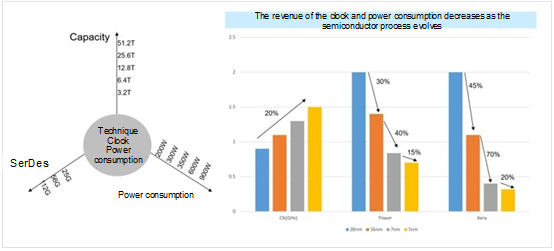

The preceding figure shows the Performance, Power, and Area (PPA) of the semiconductor process. The operational clock frequency of the chip lowers the performance by 20%, so additional area and power must be added to improve performance. As the area of the forwarding chip increases, so too does the power consumption, eventually leading to a power consumption bottleneck. A more advanced semiconductor technology is required to avoid such a restrictive bottleneck.

A typical 128-port 100 GE high-density fixed switch is used here as an example. The switch uses a 12.8 Tbit/s chip built on a 16 nm process, and the chip's power consumption is approximately 350 W. The maximum power consumption of the switch with 100 GE optical modules is 1998 W, and the maximum power consumption of a 25.6 Tbit/s device with 128 x 200 GE ports is estimated at 3000 W. As such, both the power consumption of the entire device and the demands placed on the chip's single-point heat dissipation capability continue to increase, posing great challenges to the engineering design of network devices.

If a 400 GE network node is to match the 128-port density of a node on a 100 GE network, the forwarding chip capacity must reach 51.2 Tbit/s. If future 51.2 Tbit/s chips continue to use 7 nm process technology, the estimated chip power consumption will reach 1000 W, which is not practical for fixed devices with current heat dissipation technology.

As a result, building a high-density 128-port 400 GE fixed switch around a 51.2 Tbit/s forwarding chip depends on an upgrade to 5 nm or 3 nm process technology, which reduces the power consumption of the forwarding chip to less than 900 W. If the 5 nm or 3 nm process is used, chips can be produced at scale and delivered in 2023.

The commercial delivery of high-density 400 GE switch chips (51.2 Tbit/s) has been delayed, and there are currently three options available for network devices.

Option 1: High-density 200 GE fixed device. 128 x 200 GE ports are available using a 25.6 Tbit/s chip.

Option 2: Low-density 400 GE fixed device. 64 x 400 GE ports are available using a 25.6 Tbit/s chip.

Option 3: High-density 400 GE modular switch. Multiple chips are stacked to provide higher-density 400 GE ports, and 400 GE modular switches are provided to meet the 128 x 400 GE (or even higher) port density requirements.
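The port counts behind these options follow from dividing chip capacity by port speed. The snippet below is a rough sketch of that arithmetic: `max_ports` is a hypothetical helper, and it assumes every port runs at full line rate with no capacity reserved for other purposes.

```python
def max_ports(chip_capacity_gbps, port_speed_gbps):
    """Number of full-rate ports that a single forwarding chip can serve."""
    return chip_capacity_gbps // port_speed_gbps

# Chip capacities are given in Gbit/s to keep the arithmetic in integers.
ports_100ge_on_12_8t = max_ports(12_800, 100)   # current 100 GE fixed switch
ports_200ge_on_25_6t = max_ports(25_600, 200)   # Option 1
ports_400ge_on_25_6t = max_ports(25_600, 400)   # Option 2
ports_400ge_on_51_2t = max_ports(51_200, 400)   # future single-chip 400 GE switch

print(ports_100ge_on_12_8t, ports_200ge_on_25_6t,
      ports_400ge_on_25_6t, ports_400ge_on_51_2t)
```

Only the 51.2 Tbit/s chip restores the 128-port density at 400 GE on a single chip, which is why Option 3 stacks multiple chips in a modular chassis in the meantime.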

100 GE servers will soon become the mainstream, and 400 GE optical connections are positioned to be the most cost-effective. However, given the current immaturity of the 51.2 Tbit/s (128 x 400 GE) forwarding chip, enterprises that have deployed a 100 GE full-fixed-device architecture are reluctant to consider 200 GE. If 200 GE is selected, direct evolution to 400 GE will likely be abandoned. This leads to repeated investment in optical interconnection, which accounts for more than half of the entire DCN construction cost. Consequently, the 200 GE solution is unable to take full advantage of 400 GE technology dividends.

If 64 x 400 GE fixed switches are used for networking, the port density of T2 devices is half that of the 128-port devices in the 100 GE network architecture, so the number of access servers in a PoD is also halved. In addition, 64 x 400 GE network devices are also used at the T3 layer, which halves the number of servers again and reduces the entire server cluster to 25% of its original scale. Throughout the development of DCNs, rate upgrades have preserved the size of existing server clusters. Low-port-density 400 GE network devices would greatly reduce the server cluster scale and may therefore fail to meet the requirements of service applications.
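The 25% figure can be reproduced with a simple scaling model. It is a simplified sketch, assuming that cluster size scales linearly with port density at each of the two affected layers (T2 and T3) and that nothing else in the topology changes. The function name and the `scaled_layers` parameter are hypothetical.

```python
def relative_cluster_scale(new_ports, old_ports, scaled_layers=2):
    """Cluster size relative to the original when port density changes at several layers."""
    return (new_ports / old_ports) ** scaled_layers

# 64-port 400 GE devices replacing 128-port 100 GE devices at both T2 and T3.
scale = relative_cluster_scale(64, 128)
print(f"Cluster scale relative to the 100 GE design: {scale:.0%}")
```

Halving port density at one layer alone would give 50%, so it is the compounding across two layers that makes low-density devices so costly in cluster scale.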

Let's review the history of 100 GE network evolution. In the early stages, the development of cloud computing services and compute resource virtualization technologies promoted the maturity of 100 GE industry standards. 25 GE access servers gradually saw widespread use, and the rapid growth of 100 GE optical interconnection further reduced costs.

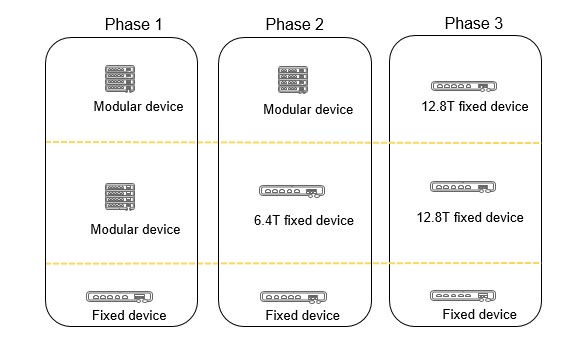

Once the industry matured and the 100 GE network era arrived, high-performance 100 GE forwarding chips lagged behind and could not be obtained during the initial phase of 100 GE network construction (phase 1 in the following figure). The industry initially used a multi-chip solution to build high-density 100 GE modular switches, and this ensured that the network scale met expectations while maximizing the technical dividends of 100 GE networks.

With the upgrade of chip performance and the launch of 6.4 Tbit/s and 12.8 Tbit/s chips, the network smoothly evolved from a 100 GE modular switch to a 100 GE fixed switch (phases 2 and 3 in the following figure).

400 GE networks will evolve in a similar manner, and while 51.2 Tbit/s chip capability is currently unavailable, multi-chip 400 GE modular switches are the better choice.

High-density 400 GE modular devices can be deployed to maintain or even expand the network scale and reduce single-bit costs. Mainstream vendors within the industry have already released 400 GE modular devices, which will promote the commercial use of 400 GE networks. With the launch of 51.2 Tbit/s switching chips, 400 GE architecture with fixed and modular devices can smoothly evolve to full-fixed-device architecture, as it finally becomes the mainstream architecture of DCNs in the 400 GE era.

[1] https://wccftech.com/amd-zen-3-epyc-milan-and-zen-4-epyc-genoa-server-cpu-detailed