Disclaimer: The views and opinions expressed in this article are those of the author and do not necessarily reflect the official policy, position, products, and technologies of Huawei Technologies Co., Ltd. If you need to learn more about the products and technologies of Huawei Technologies Co., Ltd., please visit our product pages or contact us.

Speed is the ultimate weapon to survive and thrive in fierce competition. Today, NVMe provides the fastest speed possible to help you achieve your goals.

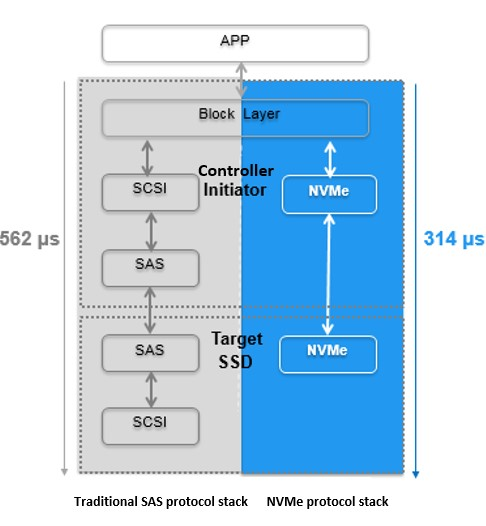

Designed for HDDs, the traditional SAS protocol hinders SSD performance because of its complex system architecture, the large number of protocol layers to be parsed, and its limited queue concurrency. NVM Express formulated the NVMe protocol standards, which replace complex protocol layers in the SAS stack, such as the I/O scheduler and SCSI, with the lightweight NVMe protocol. As a result, NVMe is a quicker, smarter, and more intuitive option for enterprises, as evidenced by its superb performance in all-flash arrays (AFAs).

Huawei has extensively researched the NVMe protocol and is the only vendor in the industry with end-to-end development of NVMe flash controllers, NVMe all-flash OSs, and NVMe SSDs. In addition, powered by an NVMe architecture with disk-controller collaboration, Huawei NVMe all-flash storage delivers a stable latency of 0.5 ms.

Architecture: SAS vs. NVMe

NVMe-based AFAs outperform SAS-based AFAs, but the question remains: how?

First, at the transmission layer, SAS-based AFAs deliver I/Os from CPUs to SSDs through the following paths:

Step 1: I/Os are transmitted from CPUs to SAS chips through the PCIe links and switches.

Step 2: I/Os are converted into SAS packets before arriving at SSDs through the SAS switching chips.

Transmission path: SAS vs. NVMe

In NVMe-based AFAs, I/Os are transmitted from CPUs to SSDs through the PCIe links and switches alone. The CPUs communicate directly with the NVMe SSDs over a shorter transmission path, resulting in higher transmission efficiency and lower transmission latency.

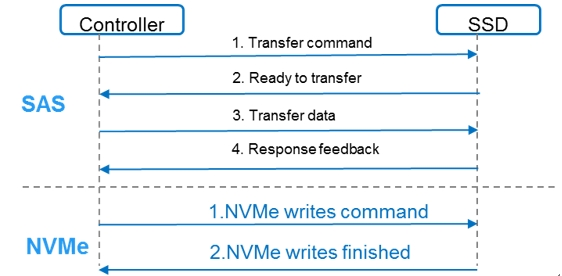

Second, at the software protocol parsing layer, SAS- and NVMe-based AFAs differ greatly in how many protocol interactions a data write needs. A complete write request requires four protocol interactions with the SCSI protocol (connected through a SAS back end), whereas the NVMe protocol requires only two.

Protocol parsing: SAS vs. NVMe
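The difference in interaction counts can be pictured as two message sequences. The following is a minimal sketch: the step names are illustrative labels chosen for this example (a typical command/ready/data/status handshake for SCSI, and a command/completion pair for NVMe), and only the counts of four and two come from the text.

```python
# Illustrative message sequences for one write request.
# Step names are assumptions for illustration; only the counts (4 vs. 2)
# come from the article.
SCSI_WRITE = [
    "initiator -> target: write command",
    "target -> initiator: transfer ready",
    "initiator -> target: data",
    "target -> initiator: status response",
]
NVME_WRITE = [
    "host -> SSD: write command (the SSD fetches the data itself)",
    "SSD -> host: completion",
]


def interactions(sequence):
    """Number of protocol interactions needed to complete the write."""
    return len(sequence)


if __name__ == "__main__":
    print("SCSI over SAS:", interactions(SCSI_WRITE))
    print("NVMe:", interactions(NVME_WRITE))
```

Each interaction is a point where one side waits for the other, so halving the count removes round trips from every write.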

Third, at the protocol encapsulation layer, when the SAS protocol stack is used, I/O requests sent from block devices are encapsulated in two layers (the SCSI and SAS protocols) before they reach SSDs through SAS links. When the NVMe protocol stack is used, I/O requests require only one layer of encapsulation (the NVMe protocol). The simplified NVMe protocol stack therefore halves the number of encapsulations, cutting encapsulation costs by 50% and reducing the CPU consumption and I/O transfer latency that each encapsulation causes.

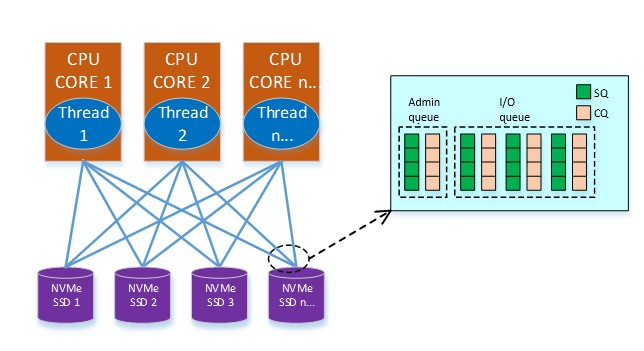
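The 50% figure follows directly from the layer counts. As a rough sketch, the hypothetical `encapsulate` helper below wraps a payload once per protocol layer. The angle-bracket "headers" are placeholders invented for this example, not real frame formats, and the calculation assumes every layer costs the same.

```python
def encapsulate(payload: bytes, layers: list[str]) -> bytes:
    """Wrap the payload once per protocol layer, innermost layer first."""
    for layer in layers:
        # Placeholder header; real protocol headers are binary structures.
        payload = f"<{layer}>".encode() + payload
    return payload


SAS_STACK = ["SCSI", "SAS"]   # two encapsulation layers
NVME_STACK = ["NVMe"]         # one encapsulation layer


def encapsulation_saving(old: list[str], new: list[str]) -> float:
    """Fraction of encapsulation steps removed, assuming equal cost per layer."""
    return 1 - len(new) / len(old)


if __name__ == "__main__":
    print(encapsulate(b"io", SAS_STACK))
    print(encapsulate(b"io", NVME_STACK))
    print(f"saving: {encapsulation_saving(SAS_STACK, NVME_STACK):.0%}")
```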

Fourth, at the multi-queue concurrency layer, the SAS protocol supports only a single queue, while the NVMe protocol supports up to 64 K queues, each holding up to 64 K concurrent commands. The multi-queue NVMe protocol performs better thanks to its higher processing concurrency and its better fit with the multiple channels and multiple dies inside SSDs.
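To put the queue figures in perspective, the short calculation below multiplies them out. It is a sketch that reads "64 K" as 64 × 1024 and uses a hypothetical `max_outstanding` helper. The result is a theoretical protocol limit, not what any single device exposes.

```python
K = 1024
NVME_MAX_QUEUES = 64 * K        # figure quoted in the article
NVME_MAX_QUEUE_DEPTH = 64 * K   # figure quoted in the article


def max_outstanding(queues: int, depth: int) -> int:
    """Upper bound on commands in flight across all queues."""
    return queues * depth


if __name__ == "__main__":
    total = max_outstanding(NVME_MAX_QUEUES, NVME_MAX_QUEUE_DEPTH)
    print(f"NVMe theoretical limit: {total:,} commands in flight")
    # With a single queue, the bound is just that queue's depth.
    print(f"Single queue of the same depth: {max_outstanding(1, NVME_MAX_QUEUE_DEPTH):,}")
```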

Fifth, at the lock mechanism layer, access to the single SAS queue must be protected by a lock in a multi-core environment. Huawei designed an I/O scheduling mechanism for NVMe that completely removes the disk-level mutex from the original I/O path and avoids I/O processing conflicts. This mechanism makes full use of the concurrent processing capability of multi-core processors, reduces software overhead, and improves back-end processing performance. In I/O scheduling, multiple threads work with multiple queues to achieve optimal performance.

I/O scheduling of the NVMe system
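The contrast between a locked single queue and lock-free per-core queues can be sketched in a few lines. This is not Huawei's scheduler. It is a structural illustration with hypothetical function names, and because of Python's global interpreter lock it shows where the lock sits rather than any real speedup.

```python
import threading
from collections import deque


def _run(worker, num_workers):
    """Start one thread per worker ID and wait for all of them."""
    threads = [threading.Thread(target=worker, args=(w,)) for w in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def submit_shared_queue(num_workers, ios_per_worker):
    """Single queue: every submission from every worker takes the same lock."""
    queue, lock = deque(), threading.Lock()
    lock_acquisitions = 0

    def worker(wid):
        nonlocal lock_acquisitions
        for i in range(ios_per_worker):
            with lock:  # all workers contend here
                queue.append((wid, i))
                lock_acquisitions += 1

    _run(worker, num_workers)
    return len(queue), lock_acquisitions


def submit_per_core_queues(num_workers, ios_per_worker):
    """One private queue per worker: no shared lock on the submit path."""
    queues = [deque() for _ in range(num_workers)]

    def worker(wid):
        own = queues[wid]  # only this worker ever touches this queue
        for i in range(ios_per_worker):
            own.append((wid, i))

    _run(worker, num_workers)
    return sum(len(q) for q in queues), 0


if __name__ == "__main__":
    print("shared queue (I/Os, lock acquisitions):", submit_shared_queue(4, 1000))
    print("per-core queues (I/Os, lock acquisitions):", submit_per_core_queues(4, 1000))
```

Both variants submit the same number of I/Os. The per-core version does so without any worker ever waiting on another.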

Sixth, at the OS optimization layer, storage OSs designed for flash introduce new disk-controller coordination algorithms.

Be stable, be perfect

Because of these advantages, customers choosing a new storage protocol are likely to be attracted by the many benefits of NVMe. However, although it offers performance previously unseen in the industry, the NVMe protocol poses new challenges for disk and system design.

As one of the leading brands to successfully develop stable AFAs, Huawei continues to showcase its capabilities, as evidenced by more than 1,000 NVMe all-flash success stories.

First, at the interface layer, Huawei NVMe SSDs support native dual-port technology, with two independent PCIe 3.0 x2 links. This provides the hardware basis for fault recovery and exception handling and ensures dual-controller redundancy, helping improve system reliability for enterprises.

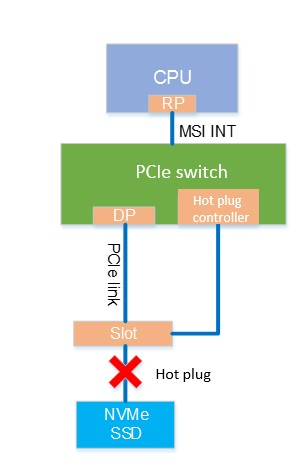

Second, at the hot plug layer, Huawei draws on its expertise in the telecommunications industry, in particular comprehensive PCIe link management, PCIe troubleshooting technology, and hot plug technology. The PCIe driver is designed to support SSD removal at any time and in any manner, providing end-to-end PCIe system reliability when a single disk is replaced or a fault occurs.

NVMe SSD removal diagram

Third, at the data protection layer, Huawei’s innovative RAID-TP software technology is based on the Erasure Code (EC) algorithm. Parity can be configured in one, two, or three dimensions, tolerating one to three simultaneous disk failures. This means that even if three disks fail at once, the system suffers neither data loss nor service interruption. Currently, only products from Huawei, NetApp, and Nimble can tolerate simultaneous failures of three disks; other vendors (such as Dell EMC, HDS, and IBM) cannot make this claim.

Although NetApp and Nimble can tolerate simultaneous failures of three disks, they both use a traditional RAID architecture with fixed data disks and hot spare disks. With this architecture, reconstructing 1 TB of data onto a hot spare disk takes 5 hours. OceanStor Dorado employs a global virtualization system that reconstructs the data in a mere 30 minutes, meeting the requirements of ultra-large-capacity deployments.
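The two reconstruction times translate into average rebuild rates as follows. This is a back-of-the-envelope sketch that assumes decimal units (1 TB = 10^12 bytes) and that the 30-minute figure also refers to 1 TB of data. The function name is hypothetical.

```python
def rebuild_rate_mb_per_s(data_tb: float, hours: float) -> float:
    """Average rebuild rate in MB/s, assuming 1 TB = 10**12 bytes."""
    return data_tb * 1e12 / (hours * 3600) / 1e6


traditional = rebuild_rate_mb_per_s(1, 5)      # hot spare rebuild, 5 hours
virtualized = rebuild_rate_mb_per_s(1, 0.5)    # global virtualization, 30 minutes

if __name__ == "__main__":
    print(f"traditional RAID: {traditional:.1f} MB/s")
    print(f"global virtualization: {virtualized:.1f} MB/s")
    print(f"speedup: {virtualized / traditional:.0f}x")
```

The shorter the rebuild window, the less time the array spends exposed to a further disk failure.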

Fourth, at the cross-site data protection layer, Huawei NVMe all-flash storage provides comprehensive data protection technologies, such as snapshot, clone, and remote replication, to help customers build a hierarchical data protection solution from local or intra-city DCs to remote DCs. Huawei is a pioneer in implementing a gateway-free active-active solution in all-flash storage.

Get with the trend, prepare for the future

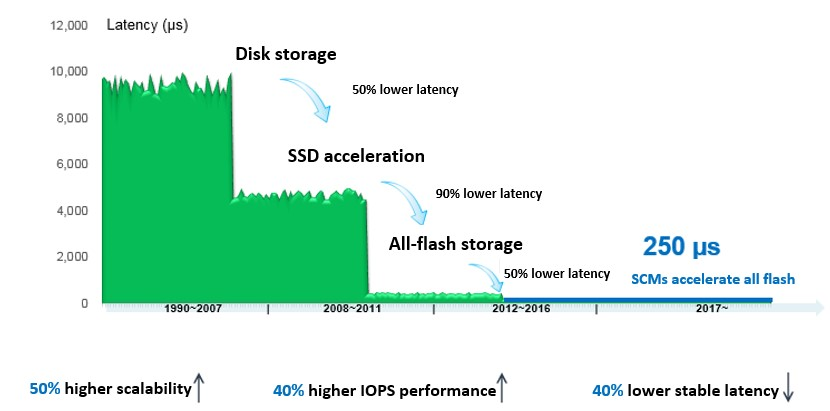

Traditional HDD storage has a latency of more than 10 ms because of its long seek time. By using electronic mapping tables, SSDs cut storage system latency by about 50%, to about 5 ms.

Traditional storage controllers often run the same OS regardless of the form of the HDDs, which is convenient when the disk type changes. However, many of the mechanisms designed for HDDs, and the OS layers built on them, are redundant for SSDs. That is why vendors have released AFAs designed for SSDs, such as those from Pure Storage and Huawei's OceanStor Dorado V3. These AFAs effectively reduce storage system latency to less than 1 ms.

In the future, faster storage media will undoubtedly be the next move for many enterprises looking to capitalize on innovative storage methods. Such are the benefits of using modern technologies. There is a large performance gap of 2 to 3 orders of magnitude between DRAM and NAND SSDs, and storage-class memory (SCM) sits between the two. All-flash storage using SCM has a latency as low as 250 μs, which ensures faster service response.
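One way to read the latency figures quoted in this article is to convert them into the number of requests a single serialized stream (one request in flight at a time) can complete per second. The sketch below does only that. The tier labels are shorthand for this example, "more than" and "less than" values are taken at their boundary, and real systems reach far higher IOPS through concurrency.

```python
# Latency figures quoted in the article, in milliseconds.
# "More than" / "less than" values are taken at their boundary.
LATENCY_MS = {
    "HDD storage": 10.0,
    "SSD storage": 5.0,
    "AFA designed for SSDs": 1.0,
    "NVMe AFA": 0.5,
    "SCM-based AFA": 0.25,
}


def iops_at_queue_depth_1(latency_ms: float) -> float:
    """Requests per second when each request waits for the previous one."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for name, ms in LATENCY_MS.items():
        print(f"{name}: {ms} ms -> {iops_at_queue_depth_1(ms):.0f} requests/s per stream")
```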

SCMs accelerate Huawei’s all-flash storage

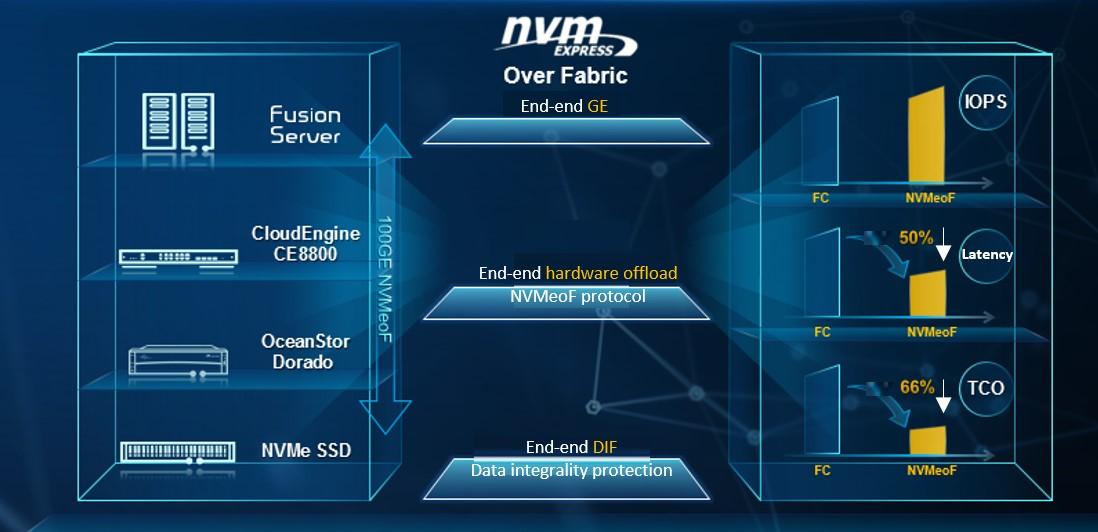

In addition to the use of NVMe with local PCIe SSDs, NVM Express released the NVMe over Fabrics specification in June 2016. The specification enables NVMe to be used over different fabric types, such as RDMA and FC, which provides high-performance remote access to SSDs and removes the barriers to sharing resources among local SSDs.

Huawei uses NVMe over Fabrics to fully share SSD resources, and provides 32 Gbps FC and 100 GE full-IP networking designs for front-end network connection, back-end disk enclosure connection, and scale-out controller interconnection. These capabilities decrease storage latency and simplify storage network management by using one IP system to control the whole DC. This design avoids complex network protocols and planning, streamlines DC deployment, and reduces DC maintenance costs.

Huawei supports an end-to-end NVMe over Fabrics solution

New SCM media are introduced to further improve system performance. With NVMe over Fabrics, SSD resources are fully shared, and front-end NVMe interfaces optimize hardware and software architectures. Ready to build more competitive all-flash storage? Then think Huawei.
