Once the cornerstone of the industrial era, electric power has now taken a backseat, giving way to computing, which fulfills an equivalent role in today's digital world. Computing power, of course, is fueled by data centers that store, analyze, and calculate massive volumes of data across the entire data lifecycle.

To better mine value from data, an ever sharper focus is being placed on achieving ever greater computing power. Yet merely increasing the scale of Data Center Networks (DCNs) in pursuit of such growth is no longer enough. The strength of a DCN now lies in the computing power it helps deliver.

Computing power is typically used to evaluate the extent to which a server processes data, and to what degree computing, storage, and network resources are collaboratively orchestrated. In short, in order to deliver high computing power, the DCNs that connect various types of data center resources need to ensure efficient, smooth data flows.

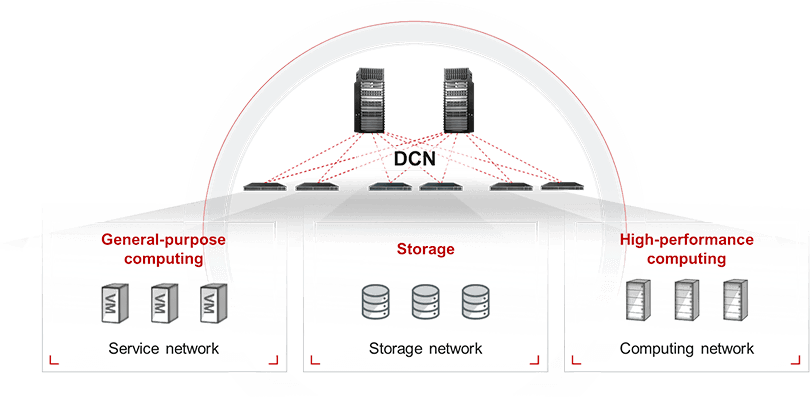

Depending on connected resources, DCNs are classified into three key categories. General-purpose computing networks provide services for external user terminals. Storage networks are responsible for the storage, read and write, and backup of data. And High-Performance Computing (HPC) networks connect Central Processing Unit (CPU) and Graphics Processing Unit (GPU) servers in order to perform HPC and Artificial Intelligence (AI) training.

In order to accelerate the flow and handling of data, these three types of networks must collaborate with each other. Only then can computing power reach its true potential. However, these network types are built over different protocols. Specifically, general-purpose computing networks are usually built on the open Ethernet protocol, while Fibre Channel (FC) and InfiniBand (IB) are adopted to construct conventional centralized storage networks and HPC networks, respectively. With three siloed network architectures, both computing power and data flow suffer, so network convergence must be achieved.

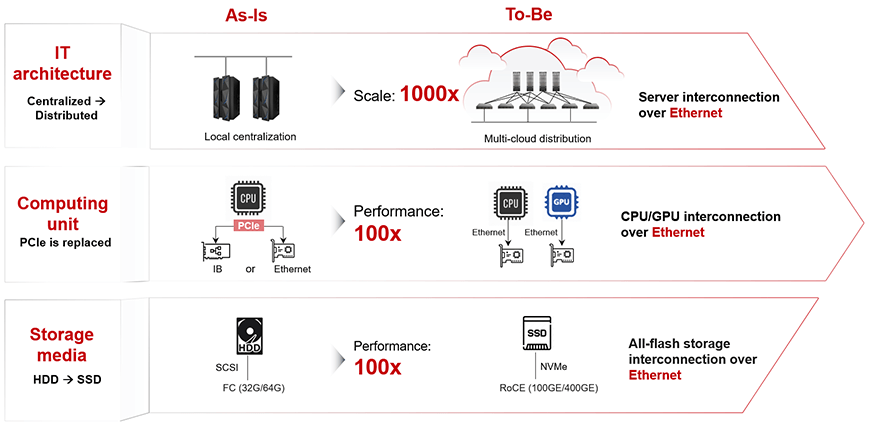

DCNs ignite the vitality of cloud applications as well as computing and storage resources, driving transformation forward. This, in turn, pushes DCNs toward embracing all-Ethernet adoption. Applications and resources are, as a result, undergoing three major transformations.

• Cloud-based upgrade: As industry services migrate to the cloud, the Information Technology (IT) architecture of enterprises, big and small, is evolving from locally centralized to distributed, on one or even multiple clouds. Open Ethernet architecture is highly interoperable, scalable, and agile, and can be flexibly invoked by the cloud, while also delivering high-level security in multi-tenant scenarios. These factors ultimately position Ethernet as a great choice for building general-purpose computing networks.

• All-flash storage: As storage media move from Hard Disk Drives (HDDs) to all-flash, read and write performance improves by a factor of 100. This exceeds the upper bandwidth limit of FC networks (32G and 64G), raising a new question: how can the throughput requirements of the all-flash era best be met? To find the answer, the industry has turned its attention toward Ethernet, which can deliver up to 400 GE bandwidth and can therefore serve as a new standard for next-generation storage networks.

• Replacing PCIe: To overcome bus speed limitations and provide higher computing power, some CPU and GPU vendors are replacing the Peripheral Component Interconnect Express (PCIe) bus with Remote Direct Memory Access over Converged Ethernet (RoCE) interconnection.

To take full advantage of the transformations that are underway, it has become clear that all-Ethernet adoption is now the best approach. According to the TOP500 list, which ranks supercomputers around the world, Ethernet has replaced IB and has effectively been the standard since 2016. Indeed, in 2019, Intel halted the development of its Omni-Path 200 Fabric. And in 2021, the company launched Ethernet switches tailored for HPC scenarios. In addition, with new test specifications and standards released in line with all-Ethernet DCN development, new opportunities for convergence are at hand.

Although the industry is generally optimistic about the shift to all-Ethernet, it nevertheless remains difficult to deliver high network performance and build mature management systems on this new architecture, resulting in three major challenges.

• Packet loss: HPC and high-end storage networks are extremely sensitive to packet loss, and Ethernet is particularly prone to it.

• Management efficiency: Traditionally, data centers are separately managed by independent mapping tools and platforms, which become less effective as data centers grow in intensity and scale, adversely affecting network Operations and Maintenance (O&M).

• Multi-cloud and multi-scenario: To guarantee the stability of core services and rapidly respond to service changes, enterprises prefer to deploy agile services on public clouds and stable services on private clouds, leading to distributed data center infrastructure across clouds. In addition, with the digital era now upon us, service scenarios are growing in complexity and diversity, placing higher requirements on network openness and servitization.

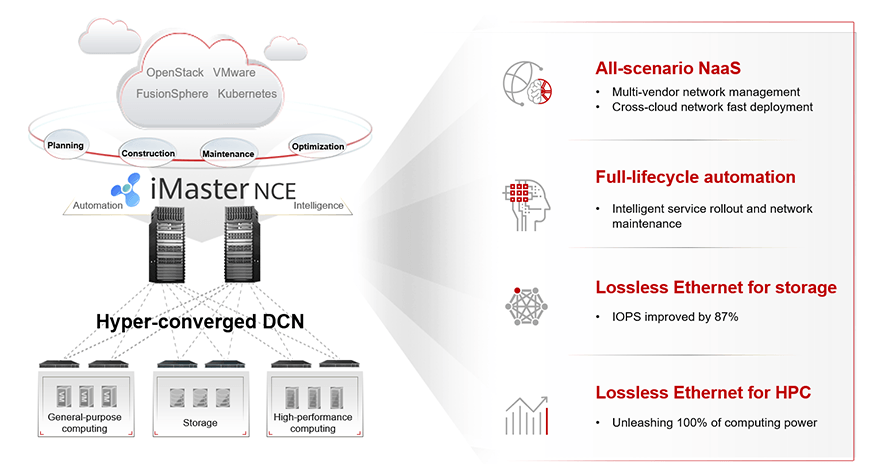

To overcome the challenges that all-Ethernet adoption presents, Huawei launched its CloudFabric 3.0 Hyper-Converged DCN Solution, drawing on feature-rich CloudEngine series data center switches and iMaster Network Cloud Engine (NCE), an intelligent network management and control system. Based on three-layer DCN convergence, the solution maximizes data flows and handling efficiency and fully unleashes the computing power of data centers, offering a range of key benefits.

• Lossless Ethernet architecture: Unifies the three siloed network architectures and converges the transmission of the diverse traffic on them.

• Full-lifecycle automated management: Converges network management, control, and analysis, and integrates the data from a wide range of management platforms and tools.

• All-scenario Network as a Service (NaaS): Converges multiple service scenarios and centralizes computing power across regions and scenarios.

Lossless Ethernet for HPC: Unleashing 100% of Computing Power

In traditional Ethernet scenarios, a packet loss rate of just 0.1% can reduce computing power by an astonishing 50%. As such, over the past four decades, vendors worldwide have proposed many approaches to eliminate Ethernet packet loss. One of these, the flow control and backpressure approach, controls packet transmission speed. However, this approach frequently suspends packet transmission, leading to ultra-low throughput.

Furthermore, as network application traffic continues to grow in diversity and complexity, controlling packet transmission speed becomes less practical. That's where Huawei's innovative iLossless-DCN algorithm steps in, implementing real-time and precise speed control, eliminating packet loss, and doubling computing power without increasing the network scale.
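The drawback of the conventional flow control and backpressure approach mentioned in this section can be illustrated with a toy model. The sketch below is not Huawei's iLossless-DCN algorithm. It is a simplified pause-and-resume loop in which every name and number (the `xoff` and `xon` thresholds, the rates, the tick-based clock) is a hypothetical assumption chosen only to show the effect.

```python
def simulate_pause_flow_control(ticks, send_rate, drain_rate, xoff, xon):
    """Toy model of pause-based backpressure on a single link.

    The receiver asks the sender to stop once its buffer holds `xoff`
    packets and to resume once it has drained down to `xon`.
    All parameters are hypothetical and measured in packets per tick.
    Returns (packets_sent, ticks_spent_paused, peak_buffer_occupancy).
    """
    queue = 0
    paused = False
    sent = 0
    paused_ticks = 0
    peak = 0
    for _ in range(ticks):
        if paused:
            paused_ticks += 1          # sender is idle for this tick
        else:
            queue += send_rate         # sender transmits at full rate
            sent += send_rate
        peak = max(peak, queue)
        queue = max(0, queue - drain_rate)  # receiver drains its buffer
        if not paused and queue >= xoff:
            paused = True              # backpressure: tell the sender to stop
        elif paused and queue <= xon:
            paused = False             # buffer has drained: resume sending
    return sent, paused_ticks, peak


sent, paused_ticks, peak = simulate_pause_flow_control(
    ticks=100, send_rate=10, drain_rate=4, xoff=30, xon=6
)
print(f"sent={sent} paused_ticks={paused_ticks} peak_buffer={peak}")
```

In this model nothing is ever dropped, because the buffer stays below `xoff + send_rate`, but the sender sits idle for a large share of the ticks. That stop-and-go behavior is the throughput cost the text attributes to pause-based flow control.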

Lossless Ethernet for Storage: Improving Performance by 87%

Among all service scenarios, financial active-active data centers have the most stringent requirements on storage network performance. In a city, data centers may be 30–70 km away from each other. Transmitting data through optical fibers over such distances results in a propagation delay of approximately 5 μs/km, so the total delay grows in proportion to the distance. For this reason, the delay of long-distance transmission is 100 times that of short-distance transmission, significantly increasing the complexity of flow control.

To resolve this issue, Huawei has added distance variables to its short-distance lossless transmission algorithm and created the iLossless-DCI algorithm, designed specifically for long-distance transmission scenarios. This exclusive algorithm predicts and copes with traffic changes based on big data analytics, achieving lossless data transmission over distances of up to 70 km at 100 Gbit/s. In addition, the storage networks built on Huawei's CloudFabric 3.0 Solution require 90% fewer links, improve Input/Output Operations Per Second (IOPS) by 87%, and reduce network delay by 42% compared to FC networks.
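To see why distance complicates flow control, it helps to work through the figures quoted above: roughly 5 μs/km of fiber delay, 30–70 km between sites, and a 100 Gbit/s link. The sketch below computes the one-way delay and the amount of data in flight during one round trip (the bandwidth-delay product). The function names are illustrative, and treating a full round trip as the reaction time of a flow control signal is a simplifying assumption rather than a description of iLossless-DCI.

```python
FIBER_DELAY_US_PER_KM = 5.0  # approximate figure quoted in the article


def one_way_delay_us(distance_km):
    """Propagation delay across the fiber, in microseconds."""
    return distance_km * FIBER_DELAY_US_PER_KM


def in_flight_bytes(distance_km, rate_gbps):
    """Bandwidth-delay product over one round trip, in bytes.

    This is the data already on the wire before a 'slow down' signal
    from the far end can take effect (simplifying assumption).
    """
    rtt_seconds = 2 * one_way_delay_us(distance_km) * 1e-6
    return rate_gbps * 1e9 * rtt_seconds / 8


for km in (30, 70):
    delay = one_way_delay_us(km)
    megabytes = in_flight_bytes(km, rate_gbps=100) / 1e6
    print(f"{km} km: one-way {delay:.0f} us, in flight {megabytes:.2f} MB")
```

At 70 km the one-way delay is 350 μs and about 8.75 MB is in flight per round trip at 100 Gbit/s, so any congestion signal arrives long after a large amount of traffic has already been sent.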

Full-Lifecycle Automation, Service Rollout in Seconds, and "1-3-5" Intelligent O&M

In most DCNs today, configurations can indeed be automatically delivered, but network design and verification still heavily rely on manual operations. Huawei changes this by introducing the concept of a digital twin to network management, enabling full-lifecycle network automation. Applying digital network modeling, Huawei's CloudFabric 3.0 Solution comprehensively evaluates over 400 network design factors, recommends optimal network design solutions based on the results, and then verifies configuration changes in seconds.

In particular, the solution provides a network knowledge graph to achieve "1-3-5" intelligent O&M: faults are detected in 1 minute, located in 3 minutes, and rectified in 5 minutes. In addition, with big data mining and modeling, Huawei's CloudFabric 3.0 Solution detects correlations between network objects and fault propagation rules to accurately detect 90% of potential faults.
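The idea of verifying a configuration change against a model of the network before delivering it, as described in this section, can be sketched in a few lines. This is only an illustration under stated assumptions: the "twin" is reduced to a set of undirected links, the only property checked is reachability, and the device names and the `verify_change` helper are hypothetical. The actual solution is described as evaluating hundreds of design factors.

```python
from collections import deque


def link(a, b):
    """An undirected link between two devices (hypothetical model)."""
    return frozenset((a, b))


def reachable(links, src, dst):
    """Breadth-first search over a set of undirected links."""
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


def verify_change(links, links_to_remove, intents):
    """Apply the change to a copy of the model and list broken intents.

    `intents` is a list of (src, dst) pairs that must stay reachable.
    An empty result means the change is safe under this simple check.
    """
    twin = set(links) - set(links_to_remove)
    return [pair for pair in intents if not reachable(twin, *pair)]


fabric = {
    link("leaf1", "spine1"), link("leaf1", "spine2"),
    link("leaf2", "spine1"), link("leaf2", "spine2"),
}
intents = [("leaf1", "leaf2")]

print(verify_change(fabric, [link("leaf1", "spine1")], intents))
print(verify_change(
    fabric, [link("leaf1", "spine1"), link("leaf1", "spine2")], intents
))
```

The first change removes one uplink and leaves the intent intact, so the list of broken intents is empty. The second removes both uplinks of `leaf1`, and the check reports the broken pair before anything reaches a real device.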

All-Scenario NaaS: Slashing the Cross-Cloud Service Deployment Time from Months to Just Days

Heterogeneous networks are commonly used in multi-cloud scenarios, and multiple sets of controllers need to be deployed to manage devices from different vendors. In such scenarios, a service change cannot be made with just one controller. If the controllers are unable to handle service changes, device vendors need to step in and update the controllers, which takes three to six months on average. Worse, these controllers also need to be connected to cloud management platforms, resulting in complex adaptation workloads.

To address this very issue, Huawei's CloudFabric 3.0 Solution defines a unified network element model and builds an open southbound framework to centrally manage multi-vendor devices and dynamically load device drivers. In addition, the solution provides thousands of network Application Programming Interface (API) services in the northbound direction, enabling flexible network orchestration on the cloud management platform and slashing the service rollout time from as long as several months to just one week.
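One way to picture a unified network element model with pluggable southbound drivers is a registry that maps each vendor to a small translation class. The sketch below is an assumption-laden illustration, not the CloudFabric or iMaster NCE API: `VlanIntent`, `Driver`, `register`, and `plan` are hypothetical names, and the rendered command strings are invented placeholders rather than real vendor CLI syntax.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class VlanIntent:
    """Vendor-neutral description of one change (hypothetical model)."""
    device: str
    vlan_id: int
    name: str


class Driver(ABC):
    """Southbound driver: turns a neutral intent into device commands."""

    @abstractmethod
    def render(self, intent):
        ...


DRIVERS = {}  # vendor key -> Driver subclass


def register(vendor):
    """Class decorator that adds a driver to the registry."""
    def wrap(cls):
        DRIVERS[vendor] = cls
        return cls
    return wrap


@register("vendor_a")
class VendorADriver(Driver):
    def render(self, intent):
        # Placeholder syntax, not a real CLI.
        return [f"A-CREATE-VLAN {intent.vlan_id} {intent.name}"]


@register("vendor_b")
class VendorBDriver(Driver):
    def render(self, intent):
        # Placeholder syntax, not a real CLI.
        return [f"b.vlan.add id={intent.vlan_id}", f"b.vlan.label {intent.name}"]


def plan(intent, inventory):
    """Look up the device's vendor and render the intent with its driver."""
    vendor = inventory[intent.device]
    return DRIVERS[vendor]().render(intent)


inventory = {"sw1": "vendor_a", "sw2": "vendor_b"}
for device in inventory:
    print(device, plan(VlanIntent(device, 100, "web"), inventory))
```

Because callers only deal with `VlanIntent` and `plan`, supporting another vendor means registering one more driver class rather than changing the orchestration code. In a real system the registry could be filled at run time, for example with `importlib.import_module`, which is one plausible reading of "dynamically load device drivers".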

Data centers integrate extensive software and hardware resources, from chips, servers, storage devices, and network facilities, to platform and application software. To deliver high computing power, these resources must be highly coordinated and converged. As a frontrunner in DCN convergence, Huawei's CloudFabric 3.0 Hyper-Converged DCN Solution extends the lossless Ethernet and Autonomous Driving Network (ADN) capabilities of CloudFabric 2.0 to the all-Ethernet era. Built on a three-layer converged architecture, this solution dramatically improves the efficiency of data flows and handling, unleashes 100% of computing power, and lays a solid foundation for the digital economy and enterprise digital transformation.

Disclaimer: The views and opinions expressed in this article are those of the author and do not necessarily reflect the official policy, position, products, and technologies of Huawei Technologies Co., Ltd. If you need to learn more about the products and technologies of Huawei Technologies Co., Ltd., please visit our website at e.huawei.com or contact us.